In the world of accessibility, Twitter and Facebook both made moderate waves with new features debuted over the past few weeks. Microsoft, meanwhile, is developing an even greater advancement that it plans to unveil in the near future.

In its quest to become the simplest and most ubiquitous sharing platform, Twitter has taken a big step toward including people with visual impairments. The company has recognized that photo sharing is central to the Twitter experience and that, to be actively used by as many people as possible, it needs to provide text alternatives for photos.

Before this week, anyone using Twitter through a screen reader had to hope that whoever posted a photo, video, or audio clip would use the caption to describe the post rather than comment on it. As you can imagine, that does not happen often.

Now, users have the option to include alternative text with the images they post. While this is potentially a huge step for many users, its impact depends on widespread adoption. As with all social media, the people uploading content need to know the option exists and then actually use it to make their posts accessible to everyone.

Not to be outdone, Facebook announced its own new feature a week after Twitter's. With help from its first blind engineer, Facebook has developed artificial intelligence software that generates a best guess at alternative text for images. Automatic alt text uses "advancements in object recognition technology" to supply the screen reader with information about a photograph. People using screen readers on iOS devices can hear a list of items that may be in the image.

This technology is not perfect, but it is a major step forward for people who rely on screen readers to use the Web. Now when you post a picture of yourself with your family, a blind friend will be told that the photo "may contain three people, smiling, outdoors." Even a vague description like that goes some distance toward helping a blind user understand the context of your post. If you have an iPhone, turn on VoiceOver and try navigating Facebook to see what you think.

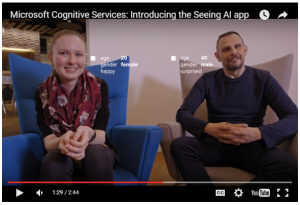

While Twitter and Facebook have rolled out new features, Microsoft has promised to unveil a real game changer in the near future. Its project, 'Seeing AI', will use your phone's camera and language processing to describe a person's surroundings. The app will work on a smartphone or with Pivothead smart glasses: you take snapshots of the world around you with your phone or the glasses, and the app speaks a description to you.

Microsoft is pushing the app to recognize fine human details in photographs, including estimates of people's gender and mood. It will also recognize many everyday objects and can even read text. A blind user could therefore navigate more easily to a restaurant and then have the menu read aloud by their phone or glasses. The product does not yet have a release date, but it was recently demonstrated at the company's Build conference and will hopefully reach the market within the coming year.

Tags: Accessibility, Facebook, Social Media, Twitter